RAG vs. Finetuning: Enhancing LLMs with new knowledge

Brad Nikkel

Imagine if you had to absorb all the knowledge you needed for your entire life by some arbitrary cutoff; let’s say third grade. After that, no speech you hear, film you watch, conversation you partake in, or book you read sinks into your noggin. Language Models (LMs) are a bit like this.

Assuming a vanilla setup, LMs “train” on information, learning everything up front. They must then harness the knowledge they trained on for the remainder of their existence (often until they’re phased out by a newer, larger model trained on even more data).

Since some Large Language Models (LLMs) have devoured a non-trivial portion of the entire internet, we might take comfort in the likelihood that most LLMs are more broadly knowledgeable than any single human could ever hope to be. At a minimum, that breadth makes LLMs useful to us. But human knowledge expands rapidly (and the internet expands along with it), so it’d be nice if the LLMs we increasingly rely on consistently tapped into new knowledge too.

An open and active area of research and experimentation seeks to do just this. Let’s explore two current approaches: finetuning (i.e., continued training) and Retrieval Augmented Generation (RAG), which fuses Information Retrieval (IR) concepts with LMs. By understanding the differences between finetuning and RAG and learning where each flourishes and flops, we’ll gain an appreciation for the complexities involved in imparting new knowledge to LMs.

🎹 Finetuning Language Models

First up is “finetuning.” Most current LMs are based on transformer variants, which require “pretraining” on a large corpus of documents to learn statistical relationships between words. Whatever an LM learns is stored in the weights of its underlying artificial neural network.

Finetuning is simply training an already trained LM on additional data at a later date, readjusting its neural network’s weights to fit the new data. Jeremy Howard of fast.ai, ever a proponent of finetuning and transfer learning, aptly describes finetuning as just more pretraining.

The key difference is that the data you finetune a model on is often more specific than the general data the LM ingested during its initial training.
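To make “finetuning is just more pretraining” concrete, here is a deliberately tiny sketch. A two-parameter linear model stands in for an LM (an assumption purely for illustration): the same gradient-descent loop first “pretrains” from zero weights on broad data, then “finetunes” by resuming from the pretrained weights on a few more specific examples. The names `train`, `general`, and `domain` are hypothetical.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def mse(w: float, b: float, data: Data) -> float:
    """Mean squared error of the linear model y = w * x + b on `data`."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w: float, b: float, data: Data, steps: int, lr: float = 0.05) -> Tuple[float, float]:
    """Plain gradient descent on MSE, starting from whatever weights are passed in."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pretraining": lots of broad data (y = 2x), starting from zero weights.
general = [(k / 10, 2 * k / 10) for k in range(-20, 21)]
pre_w, pre_b = train(0.0, 0.0, general, steps=500)

# "Finetuning": the same loop, resumed from the pretrained weights, on a few
# domain-specific examples that follow a slightly different pattern (y = 2x + 1).
domain = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
ft_w, ft_b = train(pre_w, pre_b, domain, steps=200)

print(f"pretrained: w={pre_w:.2f}, b={pre_b:.2f}, domain MSE={mse(pre_w, pre_b, domain):.3f}")
print(f"finetuned:  w={ft_w:.2f}, b={ft_b:.2f}, domain MSE={mse(ft_w, ft_b, domain):.3f}")
```

Nothing about the training loop changes between the two phases; only the starting weights and the data do. Because most of what the model needs is already in its weights, the finetuning phase gets by with far less data.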

📈 Advantages of Finetuning Language Models

Finetuning allows LMs to specialize in particular domains or tasks. Bloomberg, for example, trained a model on financial data (BloombergGPT); OpenAI finetuned ChatGPT on question-answer pairs; GPT-4chan and DarkBERT (ostensibly developed for research purposes) are GPT and BERT models finetuned on a 4chan corpus and a Dark Web corpus, respectively.

Your imagination is really your limit here. You can finetune LMs on poetry, emojis, your own journal, alt text, Klingon, or whatever language-based data you can conjure. Visual, audio, and multimodal models are all fair game for finetuning as well.

Beyond allowing LMs to niche down, finetuning enjoys the added benefit of enabling smaller models with lower training and inference costs. You can often take a large pretrained LM, for example, finetune it on a small fraction of the data it was initially trained on, and still get great performance on domain-specific applications.

🔧 Disadvantages of Finetuning Language Models

While finetuning might sound like a panacea, it’s not without its shortcomings. First, though finetuning is faster than training an LM from scratch, it still takes time, not least for gathering and prepping the new data to finetune on. Perhaps an organization like Google could finetune an LLM on a near-daily basis, but much of the information humans grapple with changes even faster than that (weather and geopolitics, for example, can turn on a dime, and when they do, some folks need to know about it sooner than it’d take to finetune an LM).

Beyond not sufficiently addressing LMs’ timeliness problem, repeated finetuning can induce catastrophic forgetting. Since an artificial neural network’s weights store the knowledge it learned during training, and since finetuning updates those same weights, a model finetuned enough times can outright “forget” some of its prior knowledge. Nor does finetuning, on its own, resolve issues like hallucinations. Finetuned LMs can also “memorize” data. That might not seem like a problem (it’s certainly better than forgetting), but memorization can cause privacy issues if the training data wasn’t sufficiently cleaned, and LMs that memorize data don’t generalize well to text that differs significantly from their training corpus.
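A toy illustration of catastrophic forgetting, under heavy simplifying assumptions: a hypothetical single-weight model (vastly smaller than any LM) learns task A, is then finetuned only on task B, and its error on task A is measured before and after. With one shared weight and no revisiting of task A’s data, the effect is exaggerated, but the mechanism is the one described above: gradient updates from new data overwrite weights that encoded old knowledge.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def mse(w: float, data: Data) -> float:
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w: float, data: Data, steps: int = 300, lr: float = 0.1) -> float:
    """Gradient descent on MSE; only the data passed in influences the update."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


task_a = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5)]   # "prior knowledge": y = 2x
task_b = [(x, -1.0 * x) for x in (0.5, 1.0, 1.5)]  # "new data": y = -x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)

w = train(w, task_b)  # finetune on task B only; task A is never revisited
loss_a_after = mse(w, task_a)

print(f"task A error before finetuning on B: {loss_a_before:.4f}")
print(f"task A error after finetuning on B:  {loss_a_after:.4f}")
```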

🐶 Retrieval Augmented Generation (RAG)

OK, so now we know that finetuning tailors LMs toward more specialized fields, but we can’t realistically finetune LMs nonstop just to keep their knowledge timely. So how else might we address LMs’ timeliness and hallucination problems?

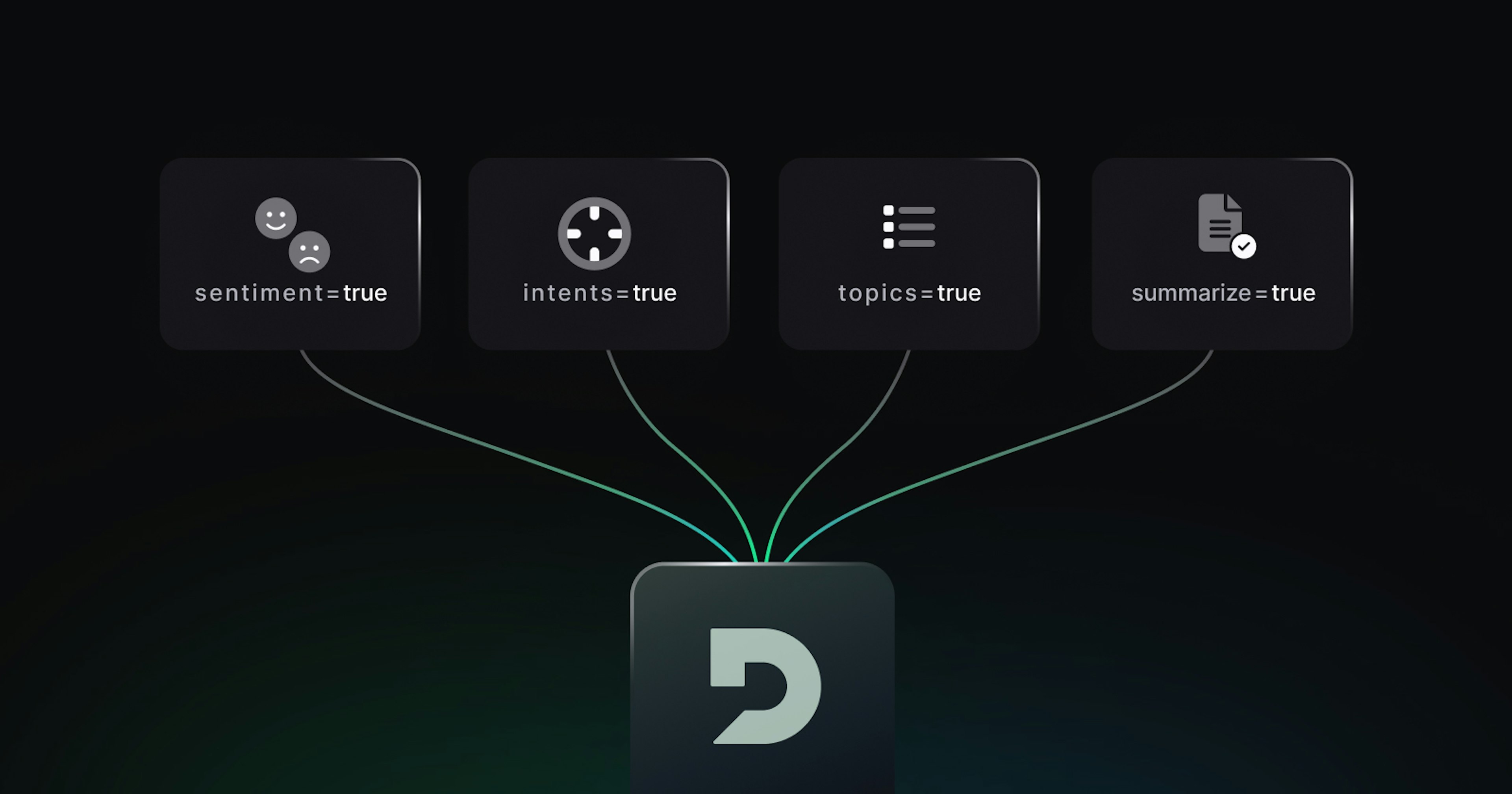

What if LMs could access a collection of external documents instead of relying only on their internal knowledge? That is essentially what Retrieval Augmented Generation (RAG), a concept formally introduced in a 2020 Facebook (now Meta) paper, enables. Coinciding with LLMs’ recent rise in capabilities and popularity is a growing recognition of their flaws, which has spurred experimentation building on the initial RAG concept. While different RAG variants offer plenty of details to get lost in, we’ll focus on RAG’s broad processes.

First, an overview. RAG is similar to checking out a few books from a library (a document collection or database), skimming through their tables of contents and indexes to gauge whether they might have the information you’re interested in (retrieval), and if so, reading the relevant passages to help inform whatever you’re trying to learn (generation).

Parametric memory is the inner knowledge stored within an artificial neural network (i.e., what LMs already know without consulting external sources), whereas non-parametric memory is external knowledge stored outside LMs’ weights (e.g., in a database) that the LM can still access. Luis Lastras, IBM Research’s director of language technologies, offers a useful analogy for LMs’ parametric and non-parametric memory retrieval: raw LM retrieval is like a closed-book exam, and RAG is like an open-book exam.

How does this all work? Broadly, RAG involves two key steps:

1. Retrieval
2. Generation (with retrieved documents)

First, for RAG to work well, LMs need to know when they don’t know something. This is key for RAG’s retrieval step. If, for example, an LM leans too hard on its inner, parametric knowledge, it’ll rarely look anything up, making it prone to hallucinate or reply like a sycophant. While we want LMs to harness external knowledge via RAG, if they constantly consult external, non-parametric knowledge, they’ll underuse their inner, parametric knowledge and get bogged down in constant information retrieval. Imagine consulting a book, article, or website to shape your every thought; no one does that. Instead, we strike an imperfect yet useful balance between whatever knowledge we’ve ingrained internally and whatever relevant external knowledge we can scrounge up. RAG, ideally, ought to do the same.
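One simple way to frame the “should I look this up?” decision is a confidence threshold: answer from parametric memory when the model seems confident, and retrieve otherwise. The sketch below assumes the model can report a confidence score between 0 and 1 (estimating that reliably, e.g. from token probabilities, is itself an open problem). `generate`, `retrieve`, the toy stand-ins, and the 0.7 threshold are hypothetical placeholders, not any particular system’s API.

```python
from typing import Callable, List, Tuple

Generate = Callable[[str], Tuple[str, float]]  # prompt -> (answer, confidence in [0, 1])
Retrieve = Callable[[str], List[str]]          # query -> relevant passages


def answer(query: str, generate: Generate, retrieve: Retrieve, threshold: float = 0.7) -> Tuple[str, bool]:
    """Return (answer, used_retrieval). Retrieve only when confidence falls below `threshold`."""
    draft, confidence = generate(query)
    if confidence >= threshold:
        return draft, False  # closed book: trust parametric knowledge
    context = "\n".join(retrieve(query))
    grounded, _ = generate(f"Context:\n{context}\n\nQuestion: {query}")
    return grounded, True  # open book: answer with retrieved, non-parametric knowledge


# Hypothetical stand-ins for a real LM and retriever, just to exercise the control flow.
def toy_generate(prompt: str) -> Tuple[str, float]:
    if prompt.startswith("Context:"):
        return "Answer based on the retrieved context.", 0.9
    if "capital of France" in prompt:
        return "Paris", 0.95
    return "I'm not sure.", 0.2


def toy_retrieve(query: str) -> List[str]:
    return ["A passage about yesterday's city council meeting."]


print(answer("What is the capital of France?", toy_generate, toy_retrieve))
print(answer("What did the city council decide yesterday?", toy_generate, toy_retrieve))
```

The threshold is the balancing act in miniature: push it toward 0 and the model almost never looks anything up; push it toward 1 and it retrieves for nearly every query.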

Retrieval often starts with embedding documents, websites, databases, or other data into vector representations (not unlike the way LMs embed words) so that they can be compared numerically. For applications where security is of little concern, the external data could be the internet itself (e.g., Bing’s generative search). Security-minded applications and applications where accuracy is vital (e.g., enterprise applications), however, would restrict their external data to a smaller, secured, and vetted pool.
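As a stand-in for a learned embedding model, the sketch below uses the “hashing trick”: each word is hashed into one of a fixed number of buckets, giving a fixed-length vector that is then normalized to unit length. This is an assumption for illustration only; real RAG systems use neural embedding models, whose vectors reflect meaning rather than just shared words. The 256-dimension size, the `embed` and `index` names, and the sample documents are hypothetical.

```python
import hashlib
import math
import re
from typing import Dict, List

DIM = 256  # hypothetical embedding size


def embed(text: str, dim: int = DIM) -> List[float]:
    """Toy embedding: hash each lowercase word into one of `dim` buckets, then L2-normalize."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # sha256 gives a stable hash across runs (Python's built-in hash() for str is salted per process).
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# Embed a (hypothetical) document collection once, up front, and keep the vectors by id.
documents = {
    "faq-1": "Refunds are accepted within 30 days of purchase.",
    "faq-2": "Shipping usually takes three to five business days.",
}
index: Dict[str, List[float]] = {doc_id: embed(text) for doc_id, text in documents.items()}
print({doc_id: len(vec) for doc_id, vec in index.items()})
```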

Next, the user’s question is embedded the same way and compared against the embedded documents (or paragraphs, sentences, etc.). The most similar pieces of external knowledge are then appended to the user’s query via some prompt engineering. With this additional context, LMs often generate more accurate responses than they would have without external information. RAG approaches usually store external data in a vector database because vector databases are designed to quickly find the stored vectors most similar to a query vector.
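Putting the query side together: the sketch below embeds the question, scores every document by cosine similarity, keeps the top k, and pastes them into a prompt. The word-count “embedding”, the brute-force scoring loop (standing in for a vector database), and the prompt template are all simplifying assumptions, not a specific library’s behavior.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy sparse embedding (lowercase word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Score every document against the query and return the k most similar."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Append retrieved passages to the user's question (hypothetical template)."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "The cafeteria menu changes every Monday.",
    "Our refund policy allows returns within 30 days of purchase.",
    "Refunds are issued to the original payment method.",
]
QUERY = "How many days do I have to request a refund?"
hits = retrieve(QUERY, docs, k=2)
print(build_prompt(QUERY, [doc for _, doc in hits]))
```

Note the toy embedding’s brittleness: “refund” and “refunds” count as different words, so the third document only matches on “to”. Learned embeddings are used precisely to avoid this kind of surface-level mismatch.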

Processes can even be set up to continuously improve RAG. You can, for example, store RAG’s generated answers in the same vector database as the other external documents. These then act like cached answers: when an LM later receives a sufficiently similar query, it can retrieve its former answer (now an embedded document) and use it as context to generate a new answer.
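A sketch of that caching loop, with a minimal in-memory class standing in for a vector database. `VectorStore`, its `add`/`search` methods, and the `source` labels are hypothetical names for illustration, not a real library’s API, and the LM’s answer is hard-coded rather than generated.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy sparse embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Minimal in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: List[Tuple[Counter, str, str]] = []  # (vector, text, source)

    def add(self, text: str, source: str) -> None:
        self.items.append((embed(text), text, source))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str, str]]:
        q = embed(query)
        scored = [(cosine(q, vec), text, source) for vec, text, source in self.items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


store = VectorStore()
store.add("Refunds are accepted within 30 days of purchase.", source="policy.txt")

# After the LM answers a question, store the answer alongside the other documents.
lm_answer = "The refund window is 30 days from the date of purchase."  # pretend LM output
store.add(lm_answer, source="cached_answer")

# A later, similar question now retrieves the earlier answer as context.
for score, text, source in store.search("How long is the refund window?", k=2):
    print(f"{score:.2f}  [{source}] {text}")
```

Labeling entries by `source`, as here, keeps cached answers distinguishable from the original documents, which makes them easier to audit or purge later.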

🚀 Advantages of RAG

RAG addresses many LM flaws. First, it partially overcomes LMs’ timeliness problem, since data can easily and quickly be added to whatever database an LM has access to, which is certainly faster and cheaper than routinely finetuning LMs.

Next, RAG can significantly reduce hallucinations. And even when LMs using RAG do make mistakes, RAG allows them to cite their sources, which in turn lets us identify and correct or delete unreliable information that the LM retrieved. Also, since RAG lets LMs keep a significant portion of their “knowledge” in an external source, it can reduce some of the data leakage concerns that accompany storing sensitive data within LMs’ weights. Additionally, because only the most relevant passages need to fit into the prompt, access to a large database of current data partially works around LMs’ context window limitations. And RAG is generally cheaper than constant finetuning (depending on how many documents one needs to embed for RAG and on how large the finetuning corpus is).
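The sketch below ties these advantages to code: adding knowledge is a write to a store rather than a training run, each retrieved passage carries a source id the LM can cite, and an unreliable source can simply be deleted. The dictionary-based `knowledge_base`, the memo ids, and the prompt wording are hypothetical; a real deployment would use a vector database with precomputed vectors instead of re-embedding every passage per query.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy sparse embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


knowledge_base: Dict[str, str] = {}  # source id -> passage


def top_sources(query: str, k: int = 2) -> List[Tuple[str, str]]:
    q = embed(query)
    ranked = sorted(knowledge_base.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]


def cited_prompt(query: str, k: int = 2) -> str:
    """Label each retrieved passage with its source id so the LM can cite it."""
    context = "\n".join(f"[{source_id}] {text}" for source_id, text in top_sources(query, k))
    return f"Answer using only these sources and cite their ids.\n{context}\n\nQuestion: {query}"


# Adding knowledge is a write to the store, not a training run.
knowledge_base["memo-jan"] = "The office is closed on Fridays."
knowledge_base["memo-jun"] = "Starting in June, the office is open on Fridays."
print(cited_prompt("Is the office open on Fridays?"))

# If an answer cites a source we now know is outdated, delete it; retrieval stops seeing it.
del knowledge_base["memo-jan"]
print(cited_prompt("Is the office open on Fridays?"))
```

Notice that the outdated memo actually outranks the newer one on word overlap in the first prompt, which is exactly why being able to trace a cited source and delete it is useful.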

🐌 Disadvantages of RAG

As promising as RAG seems, it’s not without challenges. As we touched on earlier, researchers are still figuring out how to get LMs to reliably know when to consult outside, non-parametric knowledge and when to lean on their inner, parametric knowledge. Looking up literally everything is prohibitively slow and reduces LMs’ utility, so we don’t want RAG to go overboard on the retrieval portion. Nor do we want LMs to always go with their gut. Figuring out a happy medium is perhaps RAG’s greatest challenge.

There are more considerations, though. If an LM decides to consult outside sources, how should it do so? Should the LM consider each document as a whole? Or should each document be chunked into pages, paragraphs, or sentences so the LM can query more granular information? Again, we need a happy medium. This decision impacts how to vectorize LMs’ external knowledge base.
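One common answer to the granularity question is fixed-size chunking with a little overlap, so that text near a chunk boundary appears in two neighboring chunks rather than being cut off at the edge of one. The sketch below splits on whitespace-delimited words; `chunk_words` is a hypothetical helper, its size and overlap values are arbitrary knobs, and splitting on paragraphs or sentences instead is equally valid.

```python
from typing import List


def chunk_words(text: str, size: int = 100, overlap: int = 20) -> List[str]:
    """Split text into chunks of at most `size` words; consecutive chunks share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


print(chunk_words("one two three four five six seven eight nine ten", size=4, overlap=1))
```

Smaller chunks make retrieval more precise but give the LM less surrounding context per hit; larger chunks do the reverse. That is the happy-medium tradeoff, expressed as two parameters.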

Similarly, what sources should a model consult? The entire internet, or some subset of it? A handcrafted set of locally stored documents? And how many documents should RAG retrieve? All relevant documents?

Often, RAG retrieves the top n documents (where n is some natural number), which seems reasonable, but what if the nugget of knowledge needed to answer a query lies in the (n+1)th document? Current RAG approaches often use approximate nearest neighbor search (because exact nearest neighbor search must compare the query against every stored vector, which gets slow for large collections), but approximate nearest neighbor search isn’t guaranteed to return the truly closest documents for a query, let alone useful ones, so the hallucination issue remains, even if lessened.
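The sketch below contrasts exact top-n search, which scores every stored vector, with one classic approximate method, random-hyperplane locality-sensitive hashing (LSH). LSH is chosen here only because it fits in a few lines; production vector databases typically use other approximate indexes (graph- or cluster-based ones, for example). The vectors are random numbers rather than real embeddings, and the parameters (16 dimensions, 500 vectors, 6 hyperplanes) are arbitrary assumptions.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def exact_top_n(query: Vector, vectors: List[Vector], n: int) -> List[int]:
    """Exact search: score every stored vector. Always correct, but cost grows with the collection."""
    ranked = sorted(range(len(vectors)), key=lambda i: cosine(query, vectors[i]), reverse=True)
    return ranked[:n]


class HyperplaneLSH:
    """Approximate search: only vectors whose hash signature matches the query's are scored."""

    def __init__(self, dim: int, n_planes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
        self.buckets: Dict[Tuple[bool, ...], List[int]] = {}
        self.vectors: List[Vector] = []

    def _signature(self, v: Vector) -> Tuple[bool, ...]:
        # One bit per random hyperplane: which side of it the vector falls on.
        return tuple(sum(p * x for p, x in zip(plane, v)) >= 0 for plane in self.planes)

    def add(self, v: Vector) -> None:
        self.buckets.setdefault(self._signature(v), []).append(len(self.vectors))
        self.vectors.append(v)

    def top_n(self, query: Vector, n: int) -> List[int]:
        candidates = self.buckets.get(self._signature(query), [])
        ranked = sorted(candidates, key=lambda i: cosine(query, self.vectors[i]), reverse=True)
        return ranked[:n]


rng = random.Random(42)
dim = 16
data = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(500)]
query = [rng.gauss(0.0, 1.0) for _ in range(dim)]

index = HyperplaneLSH(dim, n_planes=6)
for vector in data:
    index.add(vector)

exact = exact_top_n(query, data, n=5)
approx = index.top_n(query, n=5)
recall = len(set(exact) & set(approx)) / len(exact)
print(f"exact top-5: {exact}")
print(f"approx top-5: {approx} (recall@5 = {recall:.1f})")
```

Because the approximate index only scores vectors that land in the query’s bucket, its results can omit some of the true nearest neighbors (recall below 1.0) and can even come back short or empty. More hyperplanes mean smaller buckets and faster search but a higher chance of such misses; that speed-for-accuracy trade is the gap through which a useful document can slip.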

Finally, RAG is more expensive than simply querying an LM for its internal knowledge. RAG requires embedding and storing the external data that the LM can consult, often incurring vector database storage costs (though RAG remains cheaper than routine finetuning), and it can increase inference costs since it appends retrieved documents to queries. All these decisions and considerations are application-dependent, of course, but you can see how complex implementing RAG can get.
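A back-of-the-envelope sketch of both costs: appended chunks inflate every prompt, and stored embeddings take space proportional to the number of chunks times the vector size. Every number here (a 50-token query, four 250-token chunks, a 30-token prompt template, one million chunks, 768-dimensional float32 vectors) is a hypothetical assumption, not a measurement of any real system or provider.

```python
def prompt_tokens(query_tokens: int, k: int = 0, chunk_tokens: int = 0, template_tokens: int = 0) -> int:
    """Input tokens per request: the query, plus any prompt template, plus k appended chunks."""
    return query_tokens + template_tokens + k * chunk_tokens


def index_storage_bytes(num_chunks: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw size of the stored embeddings (float32 by default), before any index overhead."""
    return num_chunks * dim * bytes_per_value


# All numbers below are hypothetical, chosen only to show the arithmetic.
plain = prompt_tokens(query_tokens=50)
rag = prompt_tokens(query_tokens=50, k=4, chunk_tokens=250, template_tokens=30)
print(f"closed-book prompt: {plain} tokens; RAG prompt: {rag} tokens ({rag / plain:.1f}x)")

storage = index_storage_bytes(num_chunks=1_000_000, dim=768)
print(f"embedding storage: {storage / 1e9:.2f} GB")
```

Under these assumptions, each RAG request sends over 20 times as many input tokens as the bare query, which is where the added inference cost comes from when usage is billed per token.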

😯 Finetuning and Retrieval Augmented Generation are Not Mutually Exclusive

For clarity, we’ve reviewed finetuning LMs and RAG independently, but they’re not mutually exclusive. There’s nothing stopping you from setting up RAG with a finetuned model or combining other IR techniques with LMs.

RAG and finetuning offer complementary strengths and weaknesses for keeping LMs timely and reducing problematic behaviors like hallucinations. Finetuning helps specialize LMs toward specific domains, vocabulary, or novel data but takes time and compute, risks catastrophic forgetting, and doesn’t address the web’s quickly evolving nature. RAG taps into external knowledge to increase factuality and timeliness but still faces challenges around optimal retrieval.

Neither approach fully resolves all LM shortcomings, but both help address some immediate concerns. As LLMs continue permeating our digital experiences, we can expect further experimentation combining finetuning, RAG, and other IR techniques to impart more timely, factual knowledge to LLMs.

Progress will likely hinge on finding the right blend of approaches for different use cases. For organizations with ample resources and domain-specific applications, training general foundation models and then routinely finetuning them on niche data sets might be optimal. Scrappy startups or researchers navigating resource constraints, however, might prefer starting with smaller, general LMs that use RAG to access an external knowledge base.

We humans meld internal and external knowledge. So it seems plausible that optimal LM knowledge augmentation approaches will balance the intrinsic, parametric knowledge stored in LMs’ weights with supplementary, non-parametric knowledge that they can retrieve on demand. Time will tell, of course, but the current research momentum behind RAG and finetuning suggests that imparting new knowledge to LMs remains both urgent and a tough nut to crack.