Crafting AI Commands: The Art of Prompt Engineering

Nithanth Ram

The tech zeitgeist continues to be characterized by remarkable breakthroughs in artificial intelligence, the ultimate outcomes of which are still very much uncharted. Advancements in Generative AI have played an especially prominent role in bringing the entire AI field to the public’s attention. Previously, machine learning models were largely restricted to predictive capabilities and were much more deterministic. With the advent of architectures like GANs, VAEs, and Transformers, Generative AI was able to come to fruition. These models are able to generate original outputs by referencing and learning from a large corpus of data.

Generative AI isn’t a completely new phenomenon. The tech has existed for the better part of a decade. However, curating larger datasets and constructing large-scale models has helped to better denoise generative models and produce more quality results. If you have an internet connection, chances are you’ve probably messed around and created some trippy images using models like StableDiffusion or asked ChatGPT to help write your essay that’s due at midnight. (I personally can’t wait to use Github’s Copilot again after trying it out with a few of my project repositories.) Given the capabilities of these Generative AI systems, it’s almost hard to believe that we’re still in the early days of what feels like a technological revolution.

Generative AI models vary when it comes to input and output modalities and there’s a lot left to figure out, but one thing is certain: text is a powerful interface. The models that have been successfully productized by companies all leverage a well-designed text user interface. This is pretty intuitive since a text-based UI is one of the most concrete and direct channels of human-computer-interaction. Text-interfacing generative models all require the user to craft written inputs—called “prompts”—for the model to interpret in order to produce a logical output. Large language models, text-to-image generators, and other text-driven systems are naturally very reactive to nuances in the prompt due to their size and context scope.

Eliciting a desired output is a challenging task when working with such a massive black box system. And as with any emerging field, there are new jobs to be done. Whether we’re talking about folks building and testing generative models, or end users trying to get the outputs that best suit their needs, there’s a whole new skill (and, heck, even job title) in town: Prompt engineering.

What is Prompt Engineering?

Prompt engineering, in a nutshell, is the discipline of iteratively figuring out how to best instruct a model for a desired output. As mentioned before, prompts are simply just language inputs written by the user that are fed to the model. While almost all prompts are composed of human language, a few unique models like OpenAI’s Codex and even ChatGPT, can process other textual prompts like code snippets. Prompt engineering aims to compile a set of principles and techniques to craft prompts that are best suited to eliciting rich and accurate responses from the respective model they’re working with.

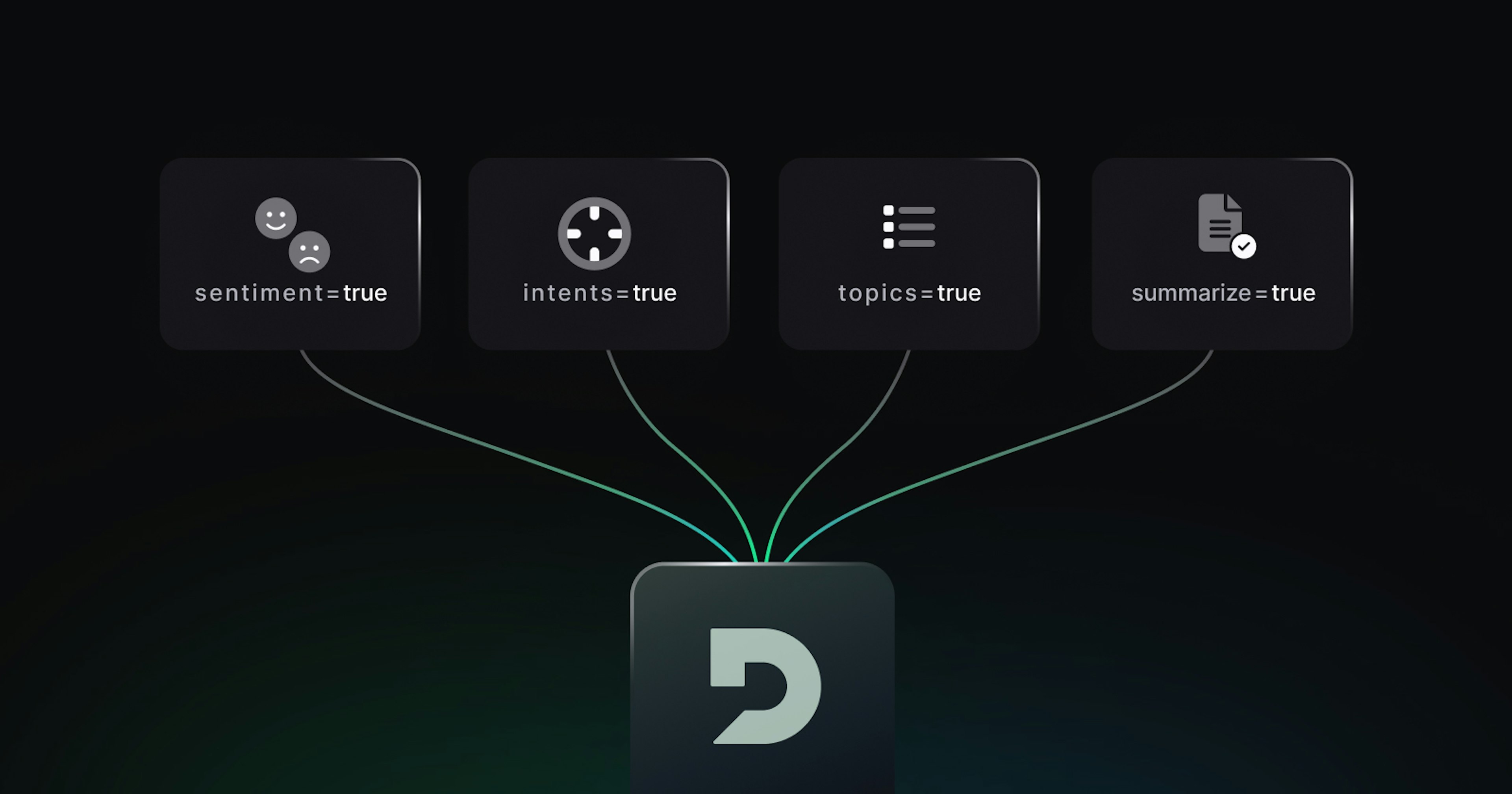

Crafting basic and effective prompts involves figuring out how best to instruct a model to output what you’re seeking. Using specific commands like “write,” “list,” “classify,” etc. will help guide the model to execute the requisite task. When prompting, precision is critical to avoid any error propagating from the model through to its response. LLM platforms such as OpenAI and Cohere all have pretty good playbooks for prompt engineering. There are more advanced techniques discussed such as recursive prompting, zero-shot and few-shot prompts, and chain-of-thought (CoT) prompting which can be used for more complex natural language generation tasks.

Prompt engineering doesn’t just deal solely with sculpting prompts. A user can tweak model parameters to yield different results from their prompts. For example, altering the temperature of a model can control how a language model picks its next token. The lower the value of the temperature parameter, the more deterministic the response will be since the highest probability token in the sequence is selected. Increasing the temperature of a model could introduce more variance and creativity in the response. If you wanted to use a model for factual and laconic responses such as Q&A or summarization, you could lower the temperature of a language model. But if you wanted to just wax poetic, increasing the temperature of a model could provide more desirable responses.

The security and ethics of a Generative AI model also needs to be heavily considered during prompt engineering. Biases in the training data as well as unethical requests need to be masked by the underlying model. To reiterate: Text is a powerful interface. Powerful doesn’t always mean better. Prompting is still such a nebulous way to interact with models. It’s very challenging to hardcode safeguards in the interface to prevent users from maliciously using generative text-input models. Prompt engineering also deals with mitigating this risk. It’s crucial to understand how adversarial prompts can potentially bypass layers of security in the model. Discovering vulnerabilities like specific prompts that leak sensitive information through prompt injection, or jailbreaking a model in order to produce a response from unethical instructions are of paramount consideration.

Learn to Crawl Before You Can Walk

Is prompt engineering the first of a new class of jobs born directly from AI innovation? It sure feels that way. It’s indisputable that writing good prompts is a skill that delivers a lot of value to companies looking to leverage LLMs and integrate them into their products. To paraphrase Sam Altman, prompt engineering is like programming with natural language. Companies like Scale AI and Anthropic AI have started looking for people to focus their efforts on hacking with prompts to improve the efficacy of their proprietary models.

However, prompt engineering as a discipline has only been around for a few years. We’re so early in the Generative AI trajectory that it’s hard to predict what prompt engineering may look like in the near future. Prompt engineering in its current state is very much an art not a science, but that could very well be subject to change. To get the most out of these models, it is important to prompt deliberately and explicitly. Much like how typing a single word into a search engine like Google is going to generate a lot of “noisy” search results, being vague with an LLM prompt is likely to result in similarly vague outputs.

Models will continue to evolve and adapt to more complexity and constraints. Just as machine learning techniques like hyperparameter tuning and cross validation became more abstracted, it's possible that a lot of the work that goes into prompt engineering may experience a similar reduction. Researchers are currently working on techniques for auto-prompting by automatically selecting an optimal prompt for the task at hand. For all we know, the duties of a prompt engineer could be absorbed by the conventional machine learning engineer.

Will AI Eat My Job?

The emergence of the prompt engineering discipline pulls on a speculative, albeit anxious, thread of thought for many people. How is AI innovation going to affect our jobs? A recent paper from Eloundou et al. found that “[...] approximately 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of [generative pre-trained transformer models], while around 19% of workers may see at least 50% of their tasks impacted.”

Looking at the broader context of the AI disruption, it’s understandable to view the societal implications of frequent innovation. On one hand, it is justified for people to fear a reduction in wages along with job displacement due to the rise of automation. AI, especially generative, multimodal models, has demonstrated the potential to handle many tasks that are performed manually today. The anxiety expressed by many that AI is going to “eat” their jobs isn’t far-fetched. However, history shows that technological innovations almost always create a net positive outcome.

Previous cycles of innovative disruption dating back to the industrial revolution, the rise of automobiles, and the inception of Silicon Valley all, in the long-run, had a net positive effect on jobs created. The workforce either shifted to new occupations or subsumed new responsibilities created by innovation within their existing occupation. The World Economic Forum estimates that in the next decade, AI will create an increase in global GDP of 26% and produce a net increase of 12 million jobs. This forecast, combined with historical trends, should assuage a lot of concerns regarding the erosion of the workforce due to rapid AI innovation.

Cycles of innovation always spawn new categories of work to be done. Think of all the different occupations created as a result of new hardware and software. We now have iOS and Android-specific mobile developers and engineers well-versed in Rust and Haskell contributing to the Crypto space. Increasing the capacity of these occupations allows more opportunity for the global workforce to become specialized.

Prompt engineering, whether it’s a short term phenomenon or not, is a positive signal to quell the panic over losing jobs to AI. Similar to how scaling the LLMs reveals new latent abilities, prompt engineering is a demonstration of how more investment and advances in the field will either produce entirely new jobs or empower existing ones. For ethical reasons and safety, we can expect some degree of human oversight and in-the-loop interaction with these systems, never fully committing to complete oblivious automation. If anything, tools like LLMs and skills like prompt engineering will empower and augment workers with more efficiency and productivity