Top 5 Machine Learning Frameworks for 2023

Jose Nicholas Francisco

The hype around machine learning and deep learning has just hit a fever pitch. With services like ChatGPT, the world is waking up to the possibilities unlocked by machine learning, and nearly every facet of our daily lives will be affected.

So how can we, as devs, take advantage of these new tools?

Well, aside from asking a robot to code our websites for us, we can add artificial intelligence, machine learning, and deep learning—or, as the cool kids say: AI, ML, and DL—to our projects using tools that are simultaneously extremely powerful and easy to use.

Adding ML to a software project can help automate processes, improve accuracy (where applicable), and reduce costs. Machine learning algorithms can identify patterns in data, make predictions, and detect anomalies in everything from customer data to machine shop outputs, at almost every stage of the product lifecycle. This can make software projects more efficient and effective, and reduce the amount of manual coding and QA required. Machine learning can also improve customer experience by providing more personalized services and recommendations.

What is an ML/DL framework?

An ML/DL framework is, quite simply, a way to process data through a powerful AI model. For example, you can now process sound through powerful speech recognition models: models that can discern speakers, transcribe noisy audio, and detect languages on the fly. You can add a tool like this to almost any app, from a video game that recognizes what you say to a virtual librarian that can look up and retrieve any information for you from the internet. Oh wait, that’s Google’s Bard.

Most of these frameworks connect to larger models, which are essentially black boxes produced by a training program to capture the required behavior. Many of these models are available online, and you can connect to services that will run your data through a particular model. You can also train models on various inputs, be they images, text, or audio.

However, even if you’re already a coding savant, creating AI models is not easy. Experts recommend first defining your problem. For example, maybe you want to automatically assess the condition of a car from a set of photographs. This assumes that these photographs would show some kind of damage to the fender or body panels or, if we’re getting into the weeds, images of metal fatigue on an engine.

Once you define the problem, you’ll need to gather data. Lots of data. Preferably gigabytes. This could include hundreds or thousands of photos from online sources, images and videos you shoot yourself, and test images that define various situations. Large language models take this to the extreme. One well-known open dataset for training them, EleutherAI's “The Pile,” weighs in at roughly 825 GiB and includes English Wikipedia, numerous books, and even code from GitHub.

And now, this is where the machine learning framework comes in. You’d use an ML/DL framework to create and train your algorithms. You will set scores to identify things like noticeable damage or dangerous breaks in the metal, for example, and then feed those back into the model. In particular, whenever the computer gives an output that you like, your code will reward it—perhaps even shower it—with reward-points. But whenever the computer gives an output that isn’t exactly desirable, you punish it with a “loss” and tell it to continue learning.

Through this reward-and-punishment system, your computer will transform its (initially) random outputs into educated guesses. Soon those educated guesses will turn into precise and nuanced calculations. And finally, with enough training data, the AI can start making predictions based on your input.
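To make the "loss" idea concrete, here is a minimal sketch in plain Python rather than any particular framework. It assumes a toy model with a single number `w` and made-up data that follows y = 3x. The function names, learning rate, and step count are all invented for illustration. Real frameworks work out the gradient for you and handle millions of parameters, but the loop has the same shape.

```python
# Toy training loop: learn w so that prediction = w * x matches the targets.
# All names and numbers here are made up for illustration.

def mean_squared_loss(w, samples):
    """Average squared error: the 'punishment' for bad guesses."""
    return sum((w * x - y) ** 2 for x, y in samples) / len(samples)

def train(samples, learning_rate=0.01, steps=500):
    w = 0.0  # start from an uninformed guess
    for _ in range(steps):
        # Gradient of the mean squared loss with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        # Nudge w in the direction that lowers the loss.
        w -= learning_rate * grad
    return w

# Hypothetical data that follows y = 3x exactly.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]

loss_before = mean_squared_loss(0.0, samples)
w = train(samples)
loss_after = mean_squared_loss(w, samples)
print(f"learned w = {w:.3f}, loss went from {loss_before:.2f} to {loss_after:.6f}")
```

Each pass measures how wrong the current guess is and adjusts `w` a little to be less wrong. That is the whole "keep learning" loop in miniature.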

Once you’ve coded and trained your model, you’ll want to keep an eye on it as it does its work. In the end, a model and a framework are only as good as their handlers have trained them to be. The upside is that the handling is fun and fascinating work.

So what framework should we use? Here are a few of the most well-known frameworks, in no particular order.

Top Machine Learning Frameworks

PyTorch

PyTorch is a framework designed by Meta (née Facebook) to make neural networks and AI algorithms as easy to use as possible. As the first syllable of the name implies, PyTorch is a Python library. Within it and its companion packages, you’ll find features for whatever problem you’re trying to solve. For example, if you want to analyze spectrograms and music, the torchaudio package is a great way forward.

Major companies like Twitter, Amazon, and Deepgram openly use PyTorch because it is so intuitive and efficient. What’s more, it has a handy companion: PyTorch Lightning, a separate open-source Python library built on top of PyTorch. It's a lightweight wrapper that makes your ML code easier to read, log, reproduce, scale, and debug by stripping out tough-to-read boilerplate.

Like most Python libraries, PyTorch works as a front end for most popular models and has its own built-in modules like torch.nn for building neural networks, plus the aforementioned torchaudio for audio processing.

Facebook's AI research group developed PyTorch for deep learning applications such as natural language processing and computer vision. It scales well, letting developers build complex models quickly. PyTorch is popular because it is easy to learn and use while staying flexible and fast, and it has a strong community of developers who are constantly improving the framework.

TensorFlow

TensorFlow is an open-source machine learning framework developed by Google for numerical computation and large-scale machine learning. It is designed to be flexible, efficient, and portable, so developers can build and deploy machine learning models easily. It brands itself as an end-to-end ML platform that allows programmers “of every skill level” to find machine learning solutions to various problems.

If you’ve ever used Google Translate or Gmail’s spam filter, then congratulations, you’ve used a TensorFlow-supported app!

But outside of Google, TensorFlow is used by many companies, including Airbnb, Intel, Dropbox, and Uber. It is also used by many research institutions, such as Stanford, MIT, and Harvard. TensorFlow is popular because it is easy to use and provides a wide range of features, such as automatic differentiation, distributed training, and visualization tools.

MATLAB

MATLAB's machine learning tooling is a powerful way for developers to build models. Much like the others, it provides a comprehensive set of tools for data preprocessing, feature engineering, model training, and model evaluation. It also offers a wide range of algorithms for supervised and unsupervised learning, as well as deep learning.

Much like TensorFlow, MATLAB also brands itself as “accessible whether you are a novice or an expert.”

But what distinguishes MATLAB from the other frameworks on this list is its pedagogical value. MATLAB tends to be the framework of choice in university classrooms and research groups. Some (read: I) may even argue that it has some of the best-looking visualizations and graphics of all the frameworks.

However, MATLAB isn’t just for college. Major companies still love it: large companies such as Microsoft, Google, Amazon, and IBM use MATLAB to develop and deploy machine learning models.

Keras

Keras is a high-level open source neural network library written in Python. It is designed to enable fast experimentation with deep neural networks. Earlier versions could run on top of TensorFlow, Microsoft Cognitive Toolkit, Theano, or PlaidML; since version 2.4, Keras has focused on TensorFlow, where it ships as the built-in high-level API. Keras allows for easy and fast prototyping, supports both convolutional networks and recurrent networks, and runs seamlessly on CPUs and GPUs.

Developers use Keras because it is a powerful and easy-to-use library for building deep learning models. It is designed to make deep learning more accessible and easier to use for developers of all skill levels. It also provides a high-level API for building complex neural networks, making it easier to experiment with different architectures.

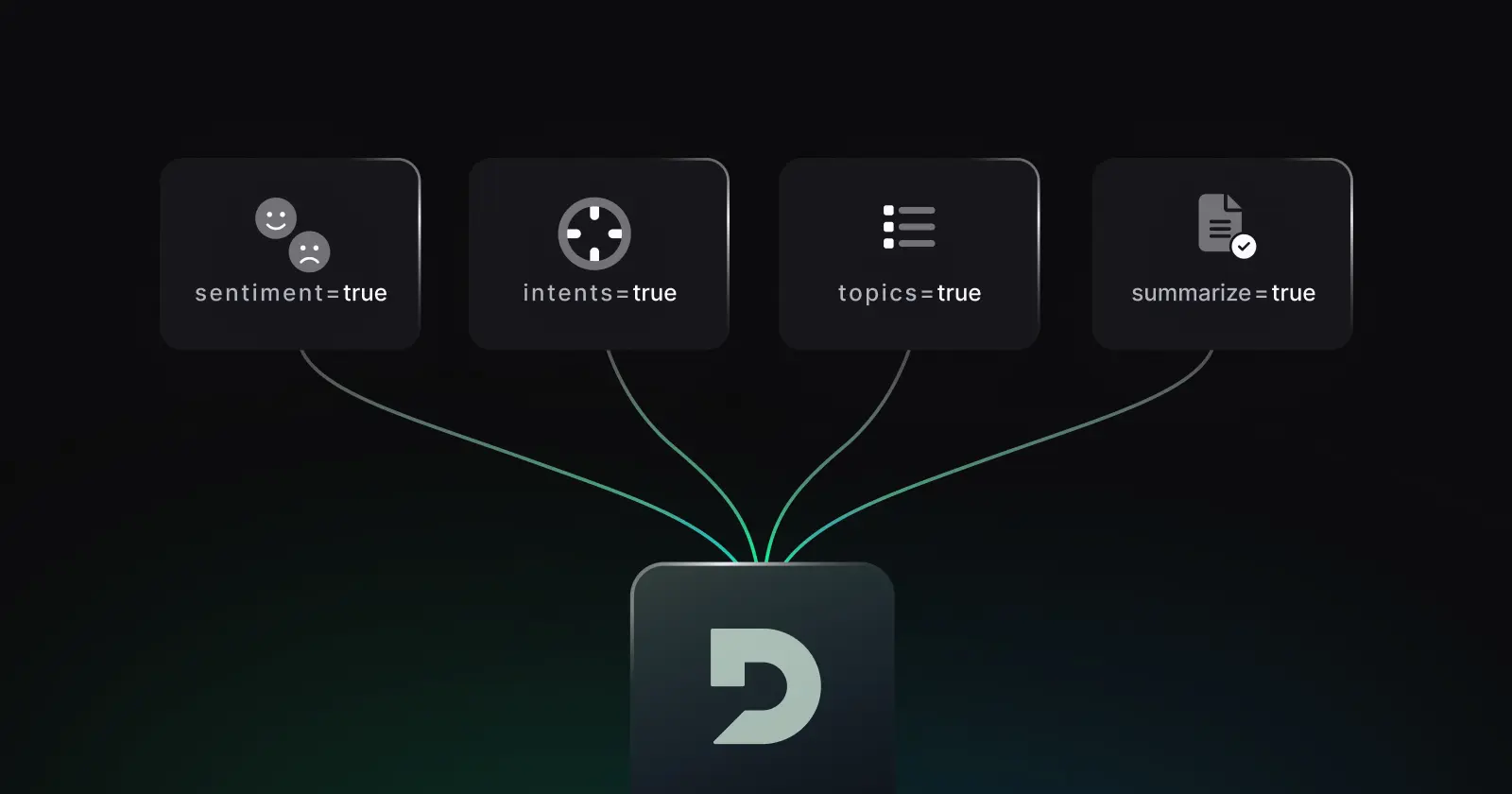

NASA (a group of really smart people and literal rocket scientists) regularly uses Keras. And Deepgram does too.

The big platforms have plugged into it too: Keras ships inside Google's TensorFlow as its high-level API, Microsoft's Cognitive Toolkit served as a Keras backend, Amazon has offered Keras in its AWS deep learning images, and Apple's Core ML tools can convert Keras models.

Scikit-learn

So here’s the story: the "scikit" in scikit-learn is short for SciPy Toolkit, and SciPy was designed to be really good at linear algebra. Fun fact: AI and ML are, like, 80% linear algebra under the hood. Sooo that makes scikit-learn sexy from a mathematical and efficiency perspective. After all, if SciPy is good at linear algebra, it must be a fantastic foundation for AI algorithms. Algorithms like…

Linear regression and logistic regression

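Example application: Given a bunch of houses and their prices, linear regression can predict the price of a new house. (Logistic regression, despite the name, predicts a category rather than a number, which makes it a workhorse for the classification tasks below.)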

Binary classification

Example application: Spam filter. Is a given email in your inbox either (1) Spam or (2) NOT spam?

N-ary classification

Example application: Same as the spam filter, but with more than just two categories. Think "street sign identifier". Given an image, is that image a Stop sign, a Do Not Enter sign, a Yield sign, or a Speed Limit sign?

Clustering

Example application: Given a bunch of messy data, find the best way to group them. Maybe you want to group Netflix users together based on similar tastes. Or group different bacteria within the same colony.

Dimensionality reduction

Also known as squishing VERY dense amounts of data into a smaller amount of space without losing too much crucial information.

Much more
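To ground the first item on that list, here is a dependency-free sketch of the house-price example. It fits a straight line through (size, price) pairs using the closed-form least-squares formulas. The houses, the `fit_line` helper, and the 1,800 sq ft query are all invented for illustration. In practice you would likely reach for scikit-learn's `LinearRegression`, which handles many features at once.

```python
# Least-squares line through (size, price) pairs, in plain Python.
# The houses below are invented for illustration.

def fit_line(xs, ys):
    """Return (slope, intercept) of the line minimizing squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

sizes_sqft = [1000, 1500, 2000, 2500]
prices_usd = [200_000, 290_000, 410_000, 500_000]

slope, intercept = fit_line(sizes_sqft, prices_usd)

# Predict the price of a hypothetical 1,800 sq ft house.
predicted = slope * 1800 + intercept
print(f"price = {slope:.2f} * sqft + {intercept:.2f}")
print(f"predicted price for 1800 sqft: {predicted:.2f}")
```

The fitted slope reads as "dollars per extra square foot," which is a big part of linear regression's appeal: the model is easy to interpret.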

Scikit-learn packages all of this into a popular open source machine learning library for Python. It is designed to be easy to use and efficient, and it provides a wide range of algorithms for classification, regression, clustering, and more. That makes it a great choice for developers who want to build machine learning models quickly across a variety of tasks, without first wiring up a deep learning stack.
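The clustering idea from the list above can also be sketched in a few lines. This is a bare-bones k-means in plain Python, assuming made-up viewing data (hours of comedy and horror watched per user) and hand-picked starting centroids. The helper names are hypothetical. Scikit-learn's `KMeans` does the same job with smarter initialization and far better performance.

```python
# Tiny k-means sketch in plain Python; the points and starting centroids are invented.

def closest(point, centroids):
    """Index of the centroid nearest to point (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(point, centroids[i])),
    )

def kmeans(points, centroids, iterations=10):
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        groups = [[] for _ in centroids]
        for point in points:
            groups[closest(point, centroids)].append(point)
        # Update step: move each centroid to the mean of its group
        # (an empty group keeps its old centroid).
        centroids = [
            tuple(sum(axis) / len(group) for axis in zip(*group)) if group else old
            for group, old in zip(groups, centroids)
        ]
    labels = [closest(point, centroids) for point in points]
    return centroids, labels

# Hypothetical hours watched of (comedy, horror) per user.
users = [(9, 1), (8, 2), (10, 0), (1, 9), (2, 8), (0, 10)]
centroids, labels = kmeans(users, centroids=[(9, 1), (1, 9)])
print(labels)
print(centroids)
```

Nobody told the algorithm which users were comedy fans and which were horror fans. It grouped them purely by how close their viewing habits were.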

Note that Spotify uses both Scikit-learn and Deepgram 😉

Roll your own

Building on any of these platforms is easy and definitely worth the amount of effort needed to become conversant in machine learning. By learning these platforms now you’ll be ready to walk into any meeting where your boss muses, “We should probably look into this AI stuff.” With a little preparation—and a little learning—you can be ready to roll in a few hours.